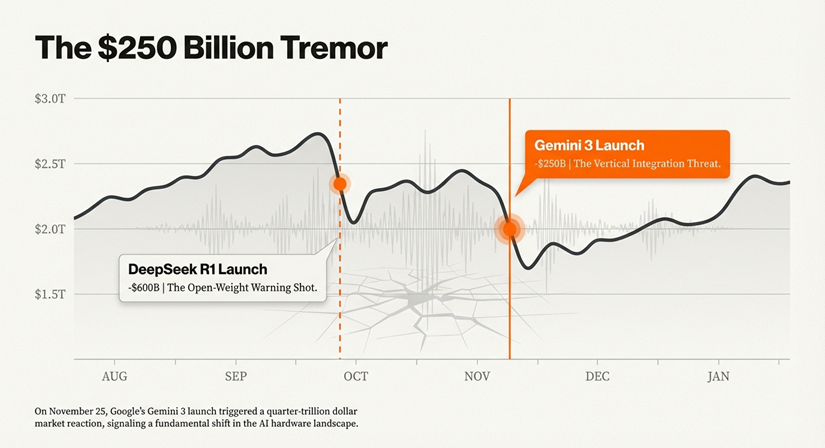

Google's latest AI reasoning model, Gemini 3, has quickly become a favorite among tech leaders, researchers, and developers, pushing Google to the front of the AI race. Its launch sent ripples through the market and reportedly wiped $250 billion off Nvidia's market capitalization, making it a watershed moment for the AI industry.

🚀 What Makes Gemini 3 the New State-of-the-Art?

Gemini 3 is a family of large language models (LLMs) that marks a major step up in AI capabilities. The family comprises Gemini 3 Pro, Gemini 3 Pro Image, and the Gemini 3 Deep Think reasoning mode. Each is engineered to push the boundaries of what's possible in artificial intelligence.

⚡ Key Capabilities of Gemini 3

- Multimodal Power: Gemini 3 Pro is a multimodal reasoning model capable of understanding text, images, audio, and spatial cues, allowing it to provide better answers to complex questions across different modalities.

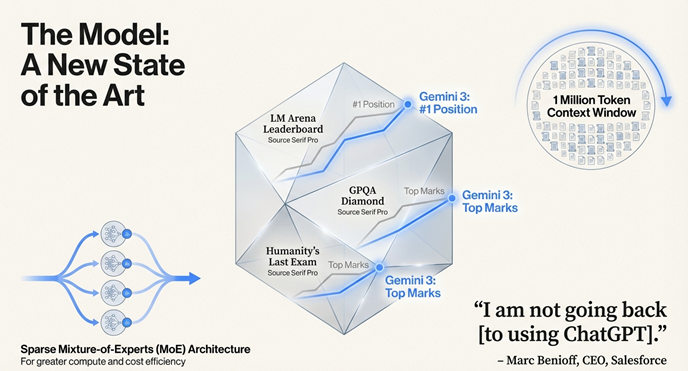

- Massive Context Window: It offers a one-million-token input context window, which lets users submit longer, more nuanced prompts without losing context.

- Compute Efficiency: The model uses a sparse mixture-of-experts (MoE) architecture, which activates only a subset of its parameters for each token. This makes it significantly more compute- and cost-efficient than traditional dense models.

- Top Performance: Early reviews are highly positive, with analysts describing it as the "current state of the art". Gemini 3 topped the LM Arena leaderboard and achieved high marks on several benchmark tests, including GPQA Diamond.

These capabilities represent a fundamental shift in how AI models handle complexity, efficiency, and real-world applications. The combination of multimodal understanding and massive context creates a model that can reason across different types of information simultaneously.
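To make the sparse mixture-of-experts idea concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is an illustration only: Google has not published Gemini 3's routing details, and the names `make_expert`, `moe_forward`, `router_weights`, and `top_k` are hypothetical. The point it shows is that a router scores every expert, but only the `top_k` highest-scoring experts actually run for a given input.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical stand-in for an expert network: scales its input."""
    return lambda x: [scale * v for v in x]


def moe_forward(x, router_weights, experts, top_k=2):
    """Route input x to the top_k experts and mix their outputs.

    router_weights holds one weight vector per expert; an expert's score
    is the dot product of its weight vector with x. Experts outside the
    top_k are never called, which is where the compute saving comes from.
    """
    scores = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    ranked = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)
    chosen = ranked[:top_k]
    # Gate values are a softmax over the chosen experts' scores only.
    gates = softmax([scores[i] for i in chosen])

    output = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        expert_out = experts[idx](x)
        output = [o + gate * v for o, v in zip(output, expert_out)]
    return output, chosen


# Toy setup: four experts, two of which run per input.
experts = [make_expert(float(i)) for i in range(4)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
output, chosen = moe_forward([2.0, 1.0], router_weights, experts, top_k=2)
print("experts used:", chosen)
print("output:", output)
```

In this toy setup only two of the four experts execute, so roughly half of the expert compute is skipped. Production MoE layers apply the same idea per token inside a transformer, usually with learned routers and load-balancing terms that this sketch omits.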

💾 The Hardware Challenge: TPUs vs. GPUs

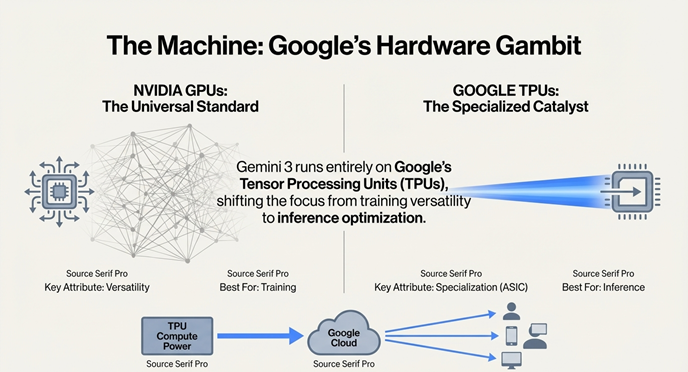

The most meaningful milestone represented by Gemini 3 is that it runs entirely on Google's custom-built Tensor Processing Units (TPUs). This deployment is central to the growing hardware challenge against Nvidia and marks a strategic pivot in how major tech companies are building their AI infrastructure.

⚠️ The Hardware Shift is Real

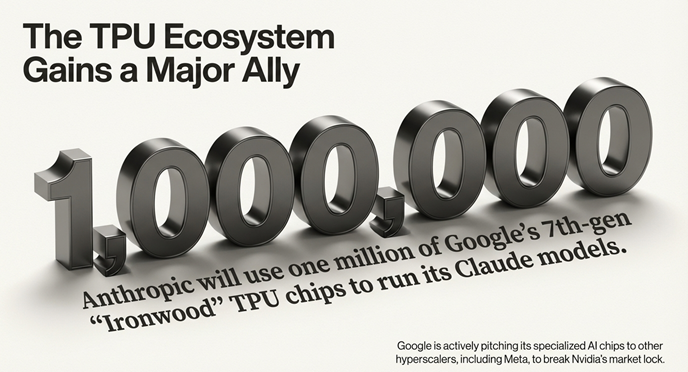

Major technology companies (hyperscalers) like Google, Microsoft, and Amazon are developing in-house, custom Application-Specific Integrated Circuits (ASICs), such as Google's TPUs and Amazon's Trainium, to reduce their reliance on Nvidia's expensive Graphics Processing Units (GPUs). The goal is clear: cut long-term costs and optimize inference, the stage in which a trained model generates outputs on new data.

📋 TPU Technology Timeline

Google's TPUs, now in their seventh generation, are designed to run the massive computations involved in training LLMs far faster than CPUs can. However, Google does not sell these specialized chips externally; it only makes their compute available to customers through Google Cloud, which gives it a proprietary advantage.

🎯 Nvidia's Counter-Strategy: Doubling Down on Dominance

Nvidia, whose GPUs can cost up to $40,000 per unit, quickly responded to the Gemini 3 threat. The company's response reveals both confidence and concern about its market position.

⚔️ Nvidia's Defensive Moves

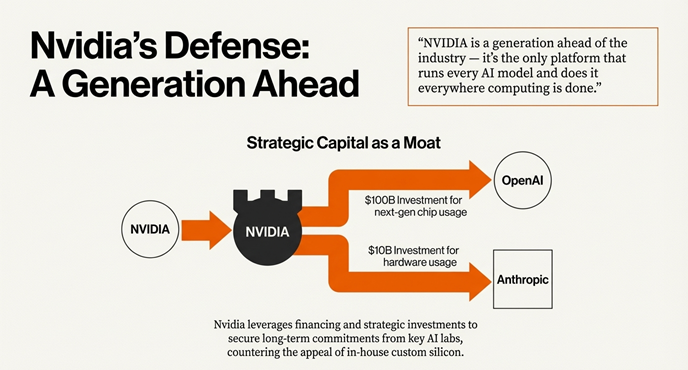

- Claiming Superiority: Nvidia asserted that it is "a generation ahead of the industry" and emphasized that its platform offers superior "performance, versatility, and fungibility" compared to ASICs, which are often limited to specific functions.

- Strategic Investment: To protect its market share, Nvidia has aggressively invested in key customers. For instance, the company announced investments in OpenAI and Anthropic, ensuring that these startups use Nvidia's next-generation hardware alongside any competing chips, such as Google's TPUs.

This strategy reveals Nvidia's understanding of a critical vulnerability: if major AI developers switch to custom chips, the demand for expensive GPUs could collapse. By investing in key startups, Nvidia is essentially hedging its bets and maintaining influence over the AI ecosystem.

TPUs vs. GPUs: A Comparison

| Aspect | Nvidia GPUs | Google TPUs |
|---|---|---|
| Cost per Unit | Up to $40,000 | Not sold externally (proprietary) |
| Availability | Available to all (universal) | Google Cloud only (limited access) |
| Versatility | General-purpose (many uses) | Specialized (AI/ML optimized) |
| LLM Efficiency | Good (industry standard) | Excellent (custom-built for LLMs) |
| Market Position | Dominant (established ecosystem) | Growing (emerging alternative) |

🏁 The Intensifying AI Race: Two Fronts of Competition

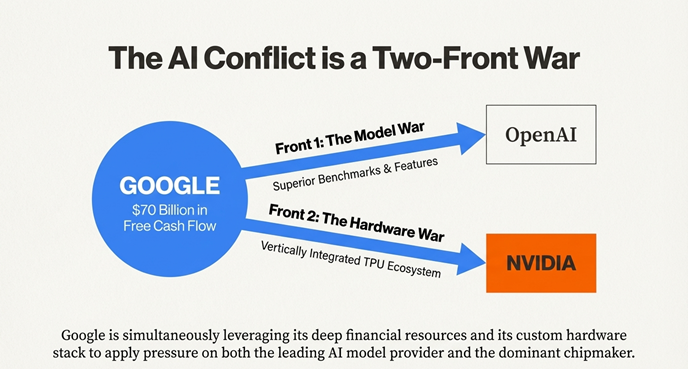

The successful rollout of Gemini 3 marks a significant escalation in the LLM race. Google is currently fighting a two-front battle: challenging Nvidia's hardware dominance while simultaneously leveraging its substantial cash flow to apply pressure on competitors like OpenAI.

💰 Google's Competitive Arsenal

Google's $70 billion cash flow provides enormous strategic flexibility. This capital allows Google to invest in talent, infrastructure, and partnerships while simultaneously undercutting competitors on pricing through its cloud services.

Gemini 3's performance has reportedly caused "rough vibes" and "temporary economic headwinds" for OpenAI. The strategic deployment of TPUs and the resulting market reaction confirm that the competition for AI dominance remains fiercely contested on both the software and hardware fronts.

📊 The Broader Implications

What's happening is a fundamental restructuring of the AI value chain. Instead of relying on a single dominant hardware provider (Nvidia), the industry is diversifying into custom chips, open-source alternatives, and multi-chip strategies. This shift benefits consumers through lower costs and increased competition but creates uncertainty for established players.

🔮 What's Next: The Future of AI Hardware Competition

The battle between Gemini 3 and TPUs on one side and Nvidia's GPUs on the other is not just about market share; it is about who will control the infrastructure of AI for the next decade. Several scenarios are possible:

⚡ Possible Future Trajectories

- Diversification Scenario: Multiple companies develop their own custom chips, creating a fragmented but competitive hardware landscape. This benefits customers but increases complexity.

- Nvidia Dominance Persists: Despite competition, Nvidia's ecosystem and performance advantages keep it dominant, similar to how Intel maintained dominance in CPUs despite competition.

- Hybrid Model: Companies use a mix of custom chips and GPUs, with Nvidia maintaining leadership in general-purpose computing but losing market share in specialized AI workloads.

- Open-Source Revolution: Open chip designs and open-source models democratize access, reducing Nvidia's leverage and enabling smaller players to compete effectively.

The AI Future is Here

Gemini 3 represents a pivotal moment where software innovation and hardware competition converge. The next few years will determine whether Nvidia keeps its monopoly-like position or whether custom chips and more diverse solutions reshape the AI infrastructure landscape.

📝 Conclusion: The New AI Order

Google's Gemini 3 is more than just another AI model; it is a symbol of a shifting power dynamic in the technology industry. By showing that a state-of-the-art model can run on custom-built chips that compete with (and potentially surpass) Nvidia's GPUs, Google has opened the door for a new era of competitive innovation.

The $250 billion market cap loss for Nvidia, while potentially temporary, sends a clear signal: the age of unchallenged hardware dominance is over. The AI race will increasingly be won by companies that can innovate on both software and hardware simultaneously.

For developers, enterprises, and investors, this means more choice, more competition, and potentially better economics. For Nvidia, it means the company must innovate faster and more strategically than ever before. For Google and other hyperscalers, it means the opportunity to reshape the AI infrastructure landscape on their own terms.

The AI revolution is accelerating, and the hardware wars have just begun. Buckle up.